There are few areas where AI has seen more robust deployment than the field of software development. From "vibe" coding to GitHub Copilot to startups building quick-and-dirty applications with support from LLMs, AI is already deeply integrated.

However, those claiming we're mere months away from AI agents replacing most programmers should temper their expectations: models are not yet good at debugging, and debugging takes up most of a developer's time. That's the suggestion of Microsoft Research, which built a new tool called debug-gym to test and improve how well AI models can debug software.

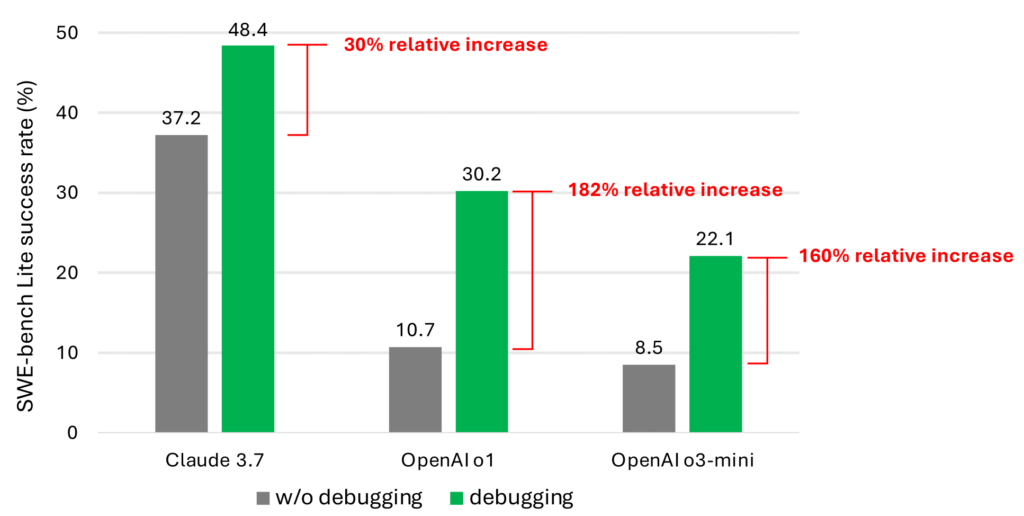

Debug-gym (available on GitHub and detailed in a blog post) is an environment that lets AI models attempt to debug any existing code repository with access to debugging tools that haven't historically been part of the process for these models. Microsoft found that without this approach, models perform poorly at debugging tasks. With it, they do better but remain a far cry from what an experienced human developer can do.

Here's how Microsoft's researchers describe debug-gym:

> Debug-gym expands an agent’s action and observation space with feedback from tool usage, enabling setting breakpoints, navigating code, printing variable values, and creating test functions. Agents can interact with tools to investigate code or rewrite it, if confident. We believe interactive debugging with proper tools can empower coding agents to tackle real-world software engineering tasks and is central to LLM-based agent research. The fixes proposed by a coding agent with debugging capabilities, and then approved by a human programmer, will be grounded in the context of the relevant codebase, program execution and documentation, rather than relying solely on guesses based on previously seen training data.
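To make the idea of an "action and observation space" concrete, here is a minimal sketch that uses Python's standard `pdb` module rather than debug-gym itself. It does not reflect debug-gym's actual API: the `run_with_debugger` helper and the `buggy_mean` function are hypothetical names invented for illustration. A scripted list of debugger commands stands in for the agent's actions, and the captured debugger transcript stands in for its observation.

```python
import io
import pdb


def buggy_mean(values):
    """Toy function with a deliberate off-by-one bug in the divisor."""
    total = 0
    for v in values:
        total += v
    return total / (len(values) - 1)  # bug: should divide by len(values)


def run_with_debugger(func, args, commands):
    """Hypothetical helper: run func under pdb with scripted commands.

    Returns the function's result and the debugger transcript, which plays
    the role of the "observation" an agent would read back.
    """
    stdin = io.StringIO("\n".join(commands) + "\n")
    stdout = io.StringIO()
    # readrc=False ignores any local .pdbrc; nosigint=True skips signal setup.
    debugger = pdb.Pdb(stdin=stdin, stdout=stdout, nosigint=True, readrc=False)
    result = debugger.runcall(func, *args)  # pauses on the function's first line
    return result, stdout.getvalue()


# "Actions": print a variable, evaluate the suspicious divisor, then resume.
actions = ["p values", "p len(values) - 1", "continue"]
result, observation = run_with_debugger(buggy_mean, ([2, 4, 6],), actions)

print(observation)  # debugger transcript, including the printed values
print(result)       # the (incorrect) mean returned by the buggy function
```

In this sketch the transcript shows that the divisor is one less than the number of inputs, which is the kind of runtime evidence a model cannot get from reading the source alone. A real agent loop would choose each command based on the previous observation instead of following a fixed script.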

Pictured below are the results of the tests using debug-gym.
